A New Kind of Data: What You Need to Know about Google Search Console Crawl Stats

It’s all about the stats and measurable data. If we can’t measure performance in a quantifiable way, it becomes difficult to set benchmarks for success and failure. As a new business owner or a small business marketer, the sheer number of metrics you could look at might seem daunting, but some deserve more attention than others. We often talk about Google Analytics stats, but what about looking specifically at Google Search Console Crawl Stats? This is an area that can teach a business owner a lot of valuable information without a lot of effort.

What are Google Search Console Crawl Stats?

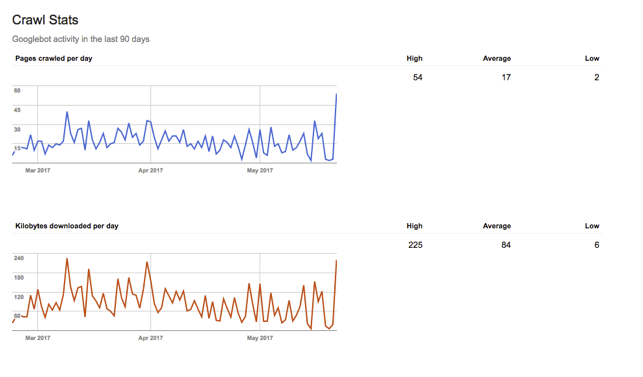

To put it simply, Google Crawl Stats show Googlebot’s activity on your website over the past 90 days. This is key to assessing what Google is gathering from your site, whether that is CSS, JavaScript, Flash, PDF files, or images. And keep in mind that Google Analytics can’t offer you this same information, meaning that in the past we were forced to make assumptions based on indirectly related data.

You can find your crawl stats in Google Search Console. In the side menu, go to the Dashboard section, select Crawl, and under the Crawl subheading you will find “Crawl Stats” (see the screenshots below):

Your crawl stats after you click should look like this:

Generally, Crawl Stats Should Be Consistent

Generally speaking, there is no “ideal” crawl number; you want to aim for consistency. According to Google, this means stable activity over a two-week period. Of course, there are some instances where you can expect to see spikes (adding a ton of content to your site, for example). There are also a lot of reasons that your crawl rate can drop. Read on to learn a little more about those cases.
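To make "consistency" a little more concrete, here is a minimal Python sketch that compares each day's crawl count against the median of the previous two weeks. Everything in it is an assumption for illustration: the function name `flag_unusual_days`, the 50% tolerance, and the sample numbers are hypothetical, and the daily counts would have to be read off your own Crawl Stats graph or server logs.

```python
from statistics import median


def flag_unusual_days(daily_counts, window=14, tolerance=0.5):
    """Return (day_index, count, baseline) for days far from the trailing median.

    daily_counts: pages crawled per day, oldest first (hypothetical input).
    window: number of preceding days used as the baseline (two weeks here).
    tolerance: allowed fractional deviation from the baseline (an assumption).
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = median(daily_counts[i - window:i])
        if baseline == 0:
            continue  # no meaningful baseline to compare against
        deviation = abs(daily_counts[i] - baseline) / baseline
        if deviation > tolerance:
            flagged.append((i, daily_counts[i], baseline))
    return flagged


# Made-up sample data: two steady weeks, then a spike and a drop.
counts = [100, 104, 98, 101, 97, 103, 99, 102, 100, 96,
          105, 101, 99, 100, 310, 102, 40]

for day, count, baseline in flag_unusual_days(counts):
    print(f"day {day}: {count} pages crawled vs. baseline {baseline}")
```

A median is used rather than an average so that a single spike does not distort the baseline for the days that follow it.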

When Your Crawl Stats Spike!

For whatever reason, we in digital marketing are programmed to read “spikes” in analytics as a positive, but an increase in activity isn’t inherently good. In many cases a Crawl Stats spike simply indicates a sudden change in the amount of content on your site. An increase in Googlebot activity can even be a negative, since heavy crawling could affect the speed and functionality of your site.

If you have just added a lot of new content (or you simply have a lot of informative content that is making waves and increasing your crawl rate), there is no need to worry: this is normal, and you can anticipate a return to normal activity shortly after the new additions. That said, there are some cases where it will not drop off as quickly as you would like. Here are some tips if that is the case:

- Make sure it is in fact Google that is crawling your site and not another crawler. Your site logs are the place to check.

- If you urgently need to stop the crawling, you can return 503 HTTP status codes for all of Googlebot’s requests.

- If you find this is happening often, you can set a “preferred maximum crawl rate” to ensure your website is never hit with more requests than it can handle.

- If you have any invalid URLs on your site that are still active, be sure to return 404 or 410 codes for them, or set up a 301 redirect for pages that have permanently moved to another location.
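The first tip, confirming that a visitor claiming to be Googlebot really is Google, can be sketched in Python as a reverse DNS lookup followed by a forward lookup. Treat this as a sketch under stated assumptions rather than a definitive implementation: the function name `is_verified_googlebot` and its injectable `reverse_lookup` and `forward_lookup` parameters are hypothetical, the accepted hostname suffixes are an assumption based on Google's published guidance, and the default forward lookup only handles IPv4 addresses.

```python
import socket

# Assumption: hostnames of genuine Google crawlers end in one of these suffixes.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Check an IP taken from your access log.

    Step 1: reverse DNS lookup on the IP to get a hostname.
    Step 2: confirm the hostname belongs to a Google crawler domain.
    Step 3: forward lookup on the hostname and confirm it maps back to the IP.

    The two lookup parameters exist only so the function can be tested
    without network access; by default the system resolver is used.
    """
    if reverse_lookup is None:
        reverse_lookup = lambda addr: socket.gethostbyaddr(addr)[0]
    if forward_lookup is None:
        # gethostbyname_ex returns (hostname, aliases, ipv4_addresses)
        forward_lookup = lambda host: socket.gethostbyname_ex(host)[2]

    try:
        host = reverse_lookup(ip).lower().rstrip(".")
        if not host.endswith(GOOGLE_CRAWLER_SUFFIXES):
            return False
        return ip in forward_lookup(host)
    except OSError:
        # DNS failures (socket.herror, socket.gaierror) are OSError subclasses.
        return False


# Usage, with an IP copied from your own logs:
#   is_verified_googlebot("66.249.66.1")
```

Checking the user-agent string alone is not enough, because any crawler can claim to be Googlebot. The forward lookup matters for the same reason: the owner of an IP range controls its reverse DNS record and could make it say anything.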

Did Your Crawl Stats Drop?

Again, consistency is key, so the flip side of crawl stat spikes is crawl stat drops. Many things can cause crawl stats to drop, which makes it even more important to watch this data so that you can turn things around and get Googlebot back on your site!

- Sometimes Googlebot is blocked from reading CSS or JavaScript when you add a new (or very broad) robots.txt rule. If you add such a rule, make sure Google can still access this content so that it can crawl it accordingly.

- Unsupported content and broken HTML are probably the two most important causes. All too often we see clients whose sites have this kind of content and consequently cannot be crawled and indexed by Google. This is obviously problematic for SEO! If you notice a drop, these are the two things you should look to fix first. Google recommends using Fetch as Google to see how Googlebot actually sees your page, so you know if something is out of order.

- As for the “preferred maximum crawl rate” setting mentioned above, make sure that you did not accidentally lower this number and thereby keep Googlebot from crawling your site adequately.

- Now for the worst news that no one ever wants to hear. If you have noticed a sudden, or even steady, drop-off of crawl activity on your site, it may be that you’re not producing enough new and valuable content and Google has opted to crawl and index your site less often. The best thing to do is look honestly at how much of the content you are producing is truly valuable to your audience. If you are not nailing this, you might want to take a closer look at that content and at what you can do to improve your appearance in the eyes of your audience and Googlebot!
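The robots.txt point in the first bullet is easy to check before you deploy a new rule. Below is a minimal sketch using Python's standard `urllib.robotparser`; the helper name `blocked_urls`, the sample rule, and the example.com URLs are all hypothetical. Note that this parser matches simple path prefixes and does not reproduce every detail of how Google interprets robots.txt (wildcards, for example), so treat the result as a first sanity check only.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# A deliberately broad rule (hypothetical) that also hides CSS and JavaScript.
rules = """\
User-agent: *
Disallow: /assets/
"""

resources = [
    "https://www.example.com/",
    "https://www.example.com/assets/site.css",
    "https://www.example.com/assets/app.js",
]

for url in blocked_urls(rules, resources):
    print("Blocked for Googlebot:", url)
```

If the stylesheets or scripts your pages depend on show up in the blocked list, narrow the `Disallow` rule or add an `Allow` rule for them, then confirm the result with Fetch as Google.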

Why Looking at Crawl Stats Can Help Your SEO

We’ve now talked about the two most important aspects of looking at and interpreting your crawl stats (sudden spikes and sudden drops, i.e., a lack of consistency), and we have talked about some of the most common reasons those changes to Google Crawl Stats happen. However, you might be wondering how to connect this to your SEO and marketing efforts.

Bottom Line. If you are not getting crawled regularly and at a steady rate, you are going to have a very difficult time climbing the search engine rankings. To optimize your SEO you need Googlebot on your side, looking at all of the glorious content you have produced; otherwise you are wasting your time. Out of all of the stats and analytics you could spend time looking at, Google Crawl Stats are a quick way to check whether all is well on your site and whether you are visible to everyone who might search for you on Google.

Update and Produce Valuable & Crawl-able Content. It is one thing to be able to tell if your crawl rates are in good shape; it is another to make that statistic actionable. Your goal should always be to produce updated content that is fresh and draws your audience in, but that can also be crawled and indexed by Google. Remember to use “Fetch as Google” to see how Googlebot sees your site, so you can make adjustments when awesome content is not visible. Below is a screenshot from Marketer Doug:

The Takeaway

The key point is that Google Crawl Stats in Google Search Console can be used to identify problems on your site quickly, with actionable implications for your SEO and site indexing. By interpreting the graph and noticing spikes or dips in Googlebot activity, you can pinpoint what might need to be fixed or improved to help your SEO efforts.

Do you have any stories of Google Crawl Stats saving your site or making your content look more valuable in the eyes of Googlebots? Let us know in the comments section below.